Google Scene Exploration Searches The World Around You

At its annual I/O conference held today, Google announces an upgrade to multisearch that conducts simultaneous queries about objects around you.

With a new feature called scene exploration, Google strives to make search even more natural by combining its understanding of all types of information — text, voice, visuals, and more.

It builds on multisearch in Google Lens, introduced last month, and makes it possible to search entire “scenes” at one time.

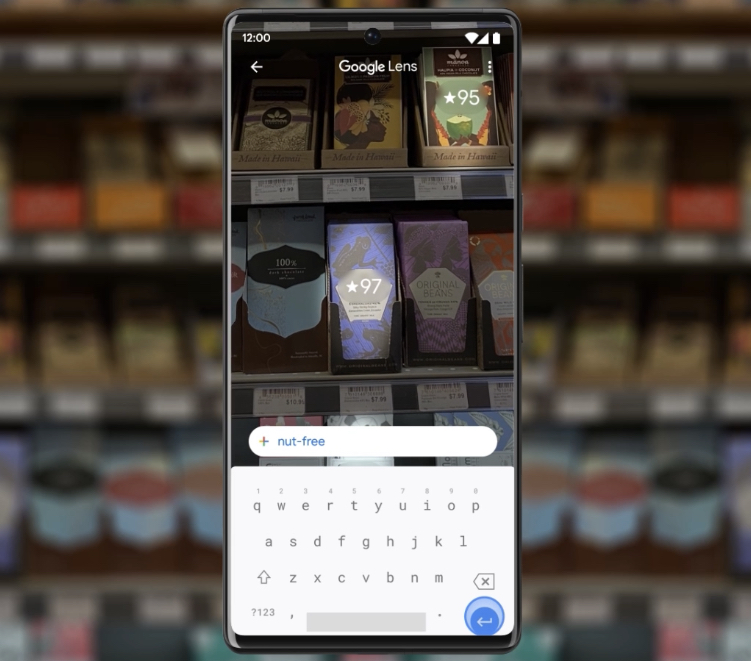

Google demonstrated scene exploration with an example of a grocery store shelf, showing how it can instantly identify items that meet a specific set of criteria.

How Does Scene Exploration Work?

When you search visually using multisearch, Google can currently recognize objects only within a single frame.

With scene exploration, rolling out at some point in the future, you’ll be able to use multisearch to pan your camera and glean insights about multiple objects in a wider scene.

In a blog post, Google states:

“Imagine you’re trying to pick out the perfect candy bar for your friend who’s a bit of a chocolate connoisseur. You know they love dark chocolate but dislike nuts, and you want to get them something of quality.

With scene exploration, you’ll be able to scan the entire shelf with your phone’s camera and see helpful insights overlaid in front of you.

Scene exploration is a powerful breakthrough in our devices’ ability to understand the world the way we do – so you can easily find what you’re looking for – and we look forward to bringing it to multisearch in the future.”

Image credit: Screenshot from blog.google/products/search/search-io22/, May 2022.

During the Google I/O keynote, the company said scene exploration uses computer vision to instantly connect the multiple frames that make up a scene and identify all the objects within it.

As scene exploration identifies objects, it simultaneously taps into Google’s Knowledge Graph to surface the most helpful results.
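Google has not published how this works under the hood, so the following is only a rough sketch of the idea as described in the keynote: objects detected across several frames are merged into one scene, then checked against a knowledge source to find the ones that match the shopper's criteria. Every name here (`Detection`, `PRODUCT_FACTS`, `merge_frames`, `matching_products`) and all of the product data are hypothetical and are not part of any Google API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object recognized in one camera frame (hypothetical structure)."""
    frame: int
    label: str


# Hypothetical stand-in for a knowledge source; the data is invented.
PRODUCT_FACTS = {
    "Bar A": {"type": "dark", "nuts": False, "rating": 4.7},
    "Bar B": {"type": "dark", "nuts": True, "rating": 4.5},
    "Bar C": {"type": "milk", "nuts": False, "rating": 4.1},
}


def merge_frames(detections):
    """Collapse per-frame detections into unique labels, in first-seen order."""
    first_seen = {}
    for d in sorted(detections, key=lambda d: d.frame):
        first_seen.setdefault(d.label, d.frame)
    return list(first_seen)


def matching_products(detections, min_rating=4.5):
    """Return labels that are dark chocolate, nut-free, and well rated."""
    results = []
    for label in merge_frames(detections):
        facts = PRODUCT_FACTS.get(label)
        if facts is None:
            continue  # No knowledge available for this object.
        if facts["type"] == "dark" and not facts["nuts"] and facts["rating"] >= min_rating:
            results.append(label)
    return results


# Panning across a shelf: the same bar can appear in more than one frame.
detections = [
    Detection(0, "Bar A"),
    Detection(0, "Bar B"),
    Detection(1, "Bar B"),
    Detection(1, "Bar C"),
    Detection(2, "Bar A"),
]

print(matching_products(detections))
```

In this toy version, only the one bar that meets all three criteria is returned, mirroring the candy bar example from Google's blog post. A real system would also have to track objects visually between frames rather than rely on matching labels.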

Google Is Reimagining Search

Prabhakar Raghavan, Senior Vice President at Google, says on Twitter that the features announced today represent the company’s new vision for search:

Today at Google I/O, we shared our vision to make the whole world around you searchable – making it easier and more natural for you to find and explore information: https://t.co/sElJQOdPZq → A mini-thread on what this means… 🧵 #GoogleIO (1/4)

— Prabhakar Raghavan (@WittedNote) May 11, 2022

Further into the Twitter thread, he adds:

“TL;DR: we believe that the way you search shouldn’t be constrained to typing keywords into a search box… we’re reimagining what it means to search again, so you can ✨search any way, and anywhere✨ (4/4)”

As Google reimagines search, businesses may have to reimagine how they optimize for it.

There may be a time in the future when businesses start asking how to optimize real-life products for Google’s scene exploration.

For now, it remains to be seen whether scene exploration has the potential to drive more traffic to publishers.

Will scene exploration pull up any website links for users to visit? Or will Google just surface information from the Knowledge Graph?

I suspect these questions, and more, will be answered as scene exploration gets closer to launch.

Source: Google

Featured Image: Poetra.RH/Shutterstock